Elon Musk was very clear at Tesla Autonomy Day a few weeks ago: LiDAR is just for ‘fools’. Tesla is going to achieve autonomous driving with just cameras, radar, ultrasound, and some smart algorithms. So who is right? Is it Waymo & Co., who are betting heavily on LiDAR and putting a lot of effort into developing that technology, or Elon Musk and others who take a camera-first or camera-only approach?

LiDAR uses laser pulses to create a three-dimensional picture of the world in the form of a 3D point cloud. Cameras, on the other hand, create only a two-dimensional picture, albeit a colored and detail-rich one. With multiple cameras arranged for stereoscopic recording, we can also reconstruct a 3D picture of the scene.
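To make the stereoscopic idea concrete, here is a minimal sketch of the textbook relation for a rectified stereo pair, where depth equals focal length times baseline divided by disparity. The function name and the numbers (focal length, baseline, disparities) are made up for illustration and do not describe any particular vehicle's camera setup.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters for a rectified stereo pair (pinhole model).

    focal_px:     focal length in pixels (assumed value)
    baseline_m:   distance between the two cameras in meters (assumed value)
    disparity_px: horizontal pixel shift of the same point between both images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 1000 px focal length, 30 cm baseline.
    for disparity in (10.0, 9.0):
        depth = depth_from_disparity(1000.0, 0.3, disparity)
        print(f"disparity {disparity:4.1f} px -> depth {depth:.2f} m")
```

Because depth is inversely proportional to disparity, the same one-pixel matching error matters more the farther away the object is, which is one reason stereo depth gets less precise at range.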

A comparison based on today’s state of the art helps here, and that is what Nathan Hayflick from Scale AI reported on in his blog. He took a dataset that Scale had annotated for Aptiv, containing objects on public roads that had been recorded by both LiDAR and cameras, and compared the results.

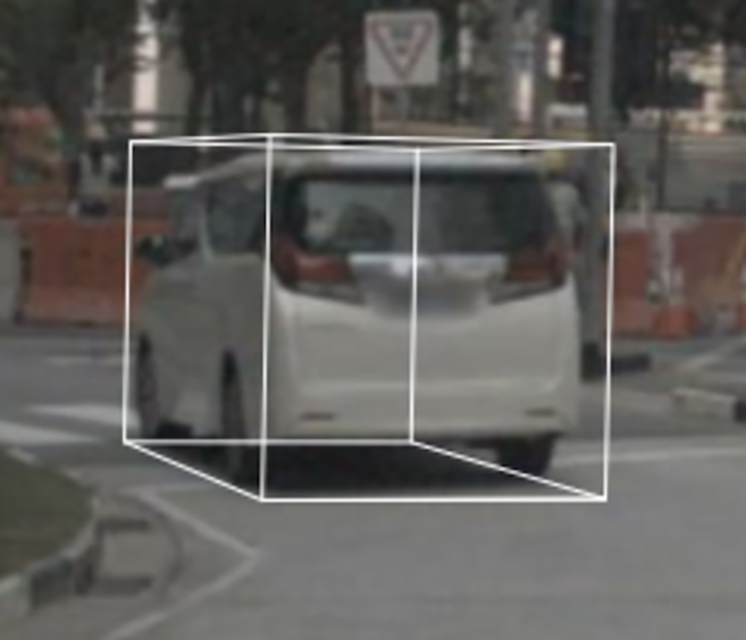

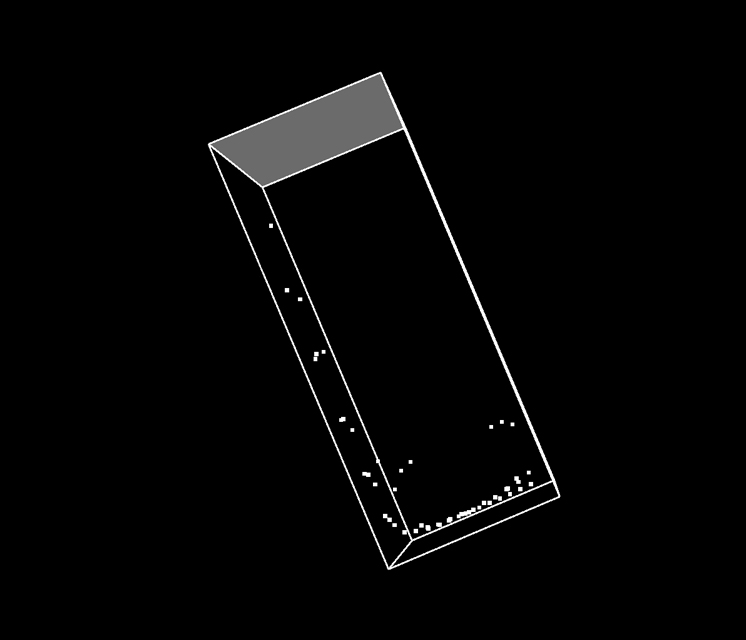

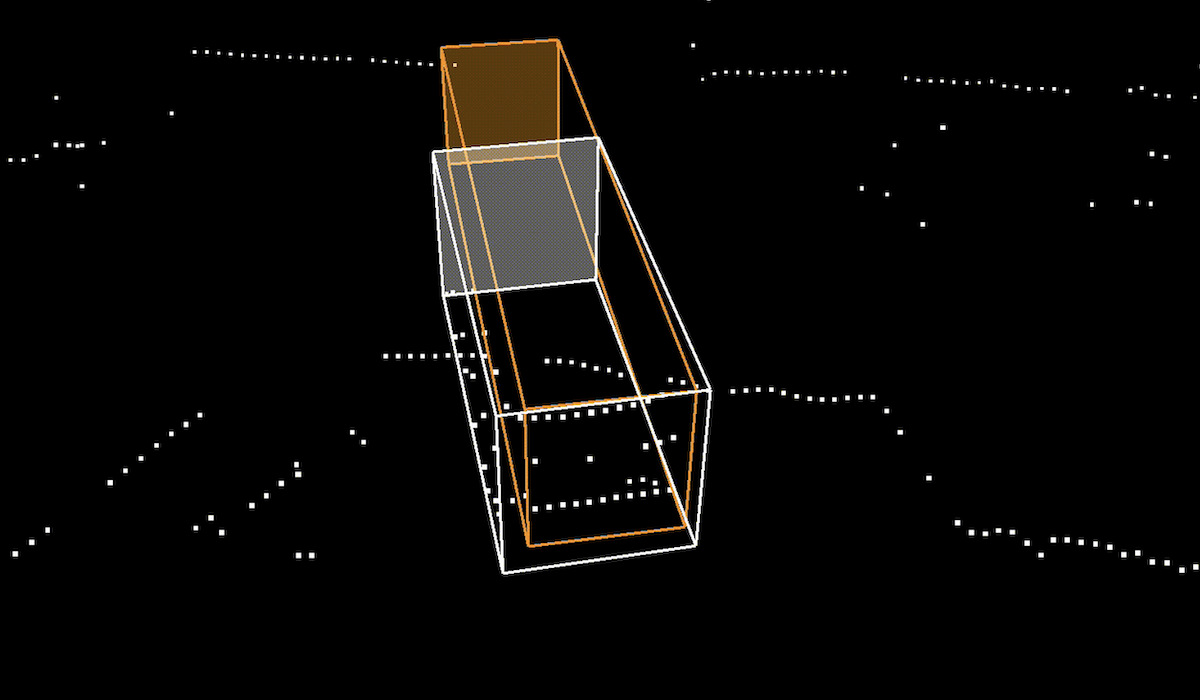

We can see a first result in the pictures below. While the annotations look good for both sensor setups, the difference becomes obvious when we look from the top down and transform the boxes into 3D objects.

| Annotation | Top-down view |
| --- | --- |
| A vehicle annotated from video only. | Top-down view overlaid on LiDAR. |
| The same vehicle annotated from a combination of LiDAR and video, which more accurately captures the width and length of the vehicle. | Top-down view with LiDAR. |

Small pixel errors have huge effects on the resulting objects. In the video-only annotation, the car shown comes out almost twice as long and narrower.

That has nothing to do with the quality of the annotations; cameras simply record only 2D information. Some of the corner pixels may be hidden or obscured, and even small deviations in the image lead to much larger deviations from the real size.
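A rough way to see why a few pixels matter so much is the following sketch. It assumes an idealized pinhole camera that looks level over flat ground, so the distance to a point on the road follows from its image row. The camera height, focal length, horizon row, and pixel rows are all hypothetical values chosen for illustration; they are not taken from the Scale dataset.

```python
def ground_distance(focal_px: float, cam_height_m: float,
                    row_px: float, horizon_row_px: float) -> float:
    """Distance to a ground point seen at image row `row_px`.

    Assumes a level pinhole camera over a flat road: Z = f * h / (row - horizon).
    """
    rows_below_horizon = row_px - horizon_row_px
    if rows_below_horizon <= 0:
        raise ValueError("ground points must lie below the horizon row")
    return focal_px * cam_height_m / rows_below_horizon


def object_length(focal_px: float, cam_height_m: float, horizon_row_px: float,
                  near_row_px: float, far_row_px: float) -> float:
    """Length of an object from the image rows of its near and far ground edges."""
    near = ground_distance(focal_px, cam_height_m, near_row_px, horizon_row_px)
    far = ground_distance(focal_px, cam_height_m, far_row_px, horizon_row_px)
    return far - near


if __name__ == "__main__":
    # Hypothetical camera: 1000 px focal length, mounted 1.5 m high, horizon at row 500.
    f, h, horizon = 1000.0, 1.5, 500.0
    true_length = object_length(f, h, horizon, near_row_px=575.0, far_row_px=560.0)
    # Same car, but the hidden far edge is placed 10 pixels too high in the image.
    off_length = object_length(f, h, horizon, near_row_px=575.0, far_row_px=550.0)
    print(f"length with correct far edge: {true_length:.1f} m")
    print(f"length with 10 px error:      {off_length:.1f} m")
```

In this toy setup, moving the far edge by ten pixels is enough to double the estimated length of the car. A LiDAR return measures that range directly, so it is not subject to this particular amplification.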

Other scenarios that Nathan Hayflick discussed included driving at night and objects partly hidden by bushes and other vegetation. In these cases the cameras record less information, while LiDAR sees as well at night as during the day and can also partly ‘see’ through foliage.

The bottom line is that having more sensor types available leads to better results. In the short term, no major improvement is to be expected from camera technology, and therefore no better models or results from the machine learning systems that rely on it. Autonomous vehicles that base their navigation on cameras alone will more often encounter scenarios in which they run into difficulties.

Read Nathan’s full blog here.

This article was also published in German.

You ignored stereoscopic perspective in the smart camera system, while giving LIDAR a new magical ability to pass through the bus & detect the unsensable far side. Your simulation is as flawed as your logic. The best that could be predicted is a tie from a mere snapshot. Plus, you also failed to take into account any video effect, which gives an AI the ability to compare adjacent strobe frames and treat the bus as a moving entity with direction & speed.
