With the launch of Tesla’s Robotaxi service in Austin, the debate about which sensors to use is once again gathering pace. Some are firmly of the opinion that Tesla’s cameras-only approach will not be enough and cite plenty of examples of Tesla’s Full Self-Driving (FSD) making mistakes, while others believe that Tesla’s approach must work because people also drive “with eyes only”.

But what is true? Who is right? I want to look at three aspects. Firstly, the hardware equipment of Waymo and Tesla. Secondly, what advantages and challenges there are with the respective approaches. And thirdly, how Waymo and Tesla approach development, because these also differ massively.

What I won’t go into are the ML systems and simulations, which also require a lot of money and effort to make sense of the data collected and to be able to run through millions of variants.

1) Sensor Approaches

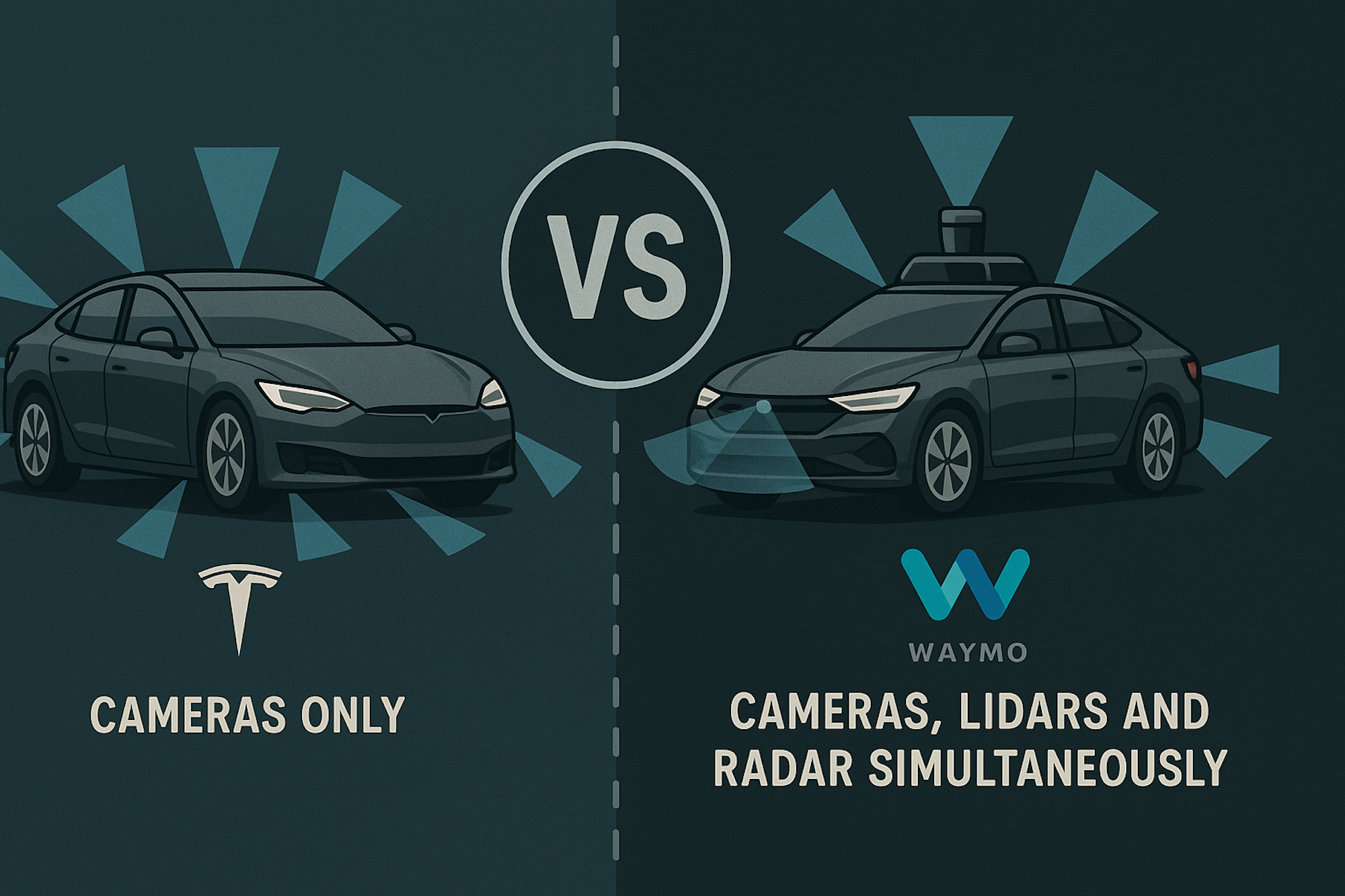

Roughly speaking, there are two dominant philosophies:

Waymo Sensor Approach

Waymo and most other developers of self-driving technology use a combination of several sensor modalities. In most cases, these include multiple cameras, lidars and radars. In some cases (as with Zoox), they even include thermal cameras.

The cameras themselves can differ in resolution, and some use wide-angle lenses.

Lidars, in turn, come in long-range versions (such as the one on the roof of Waymo vehicles, a long-range lidar with a range of 500 meters) and short-range versions (such as the Laser Bear Honeycomb lidar), which can cover between 95 and 360 degrees of the surroundings.

There are also variations in radar, from traditional 3D radar to the newer 4D radar, which adds elevation to the distance, horizontal angle and speed of an object that traditional radar already measures.

The sensors themselves require additional equipment for use in all weather conditions, such as wipers, heaters or cooling devices, so that they can measure reliably and accurately across a wide temperature range.

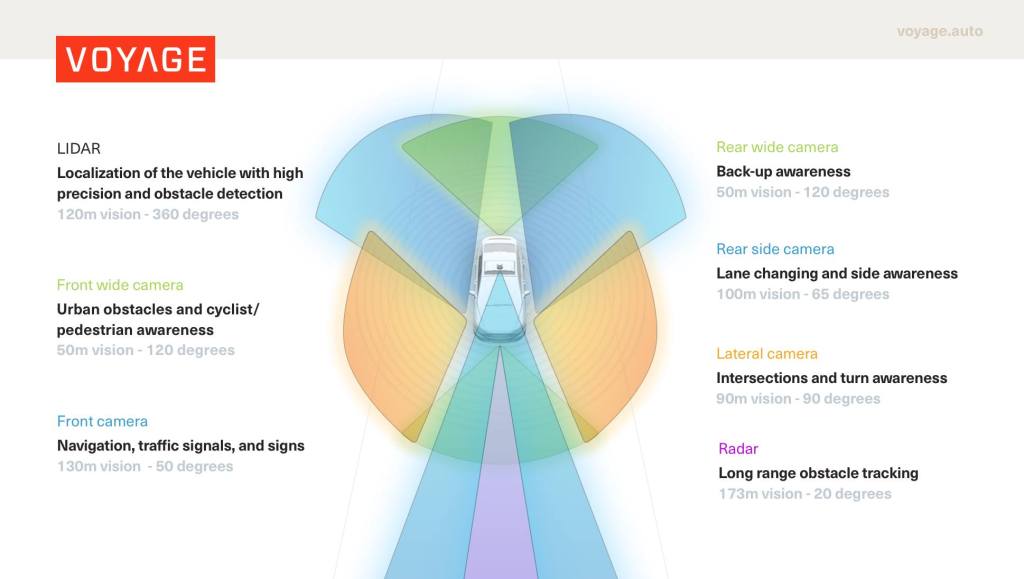

All sensors together cover the full 360 degrees around the vehicle, as the following image shows by way of example.

The data supplied is then processed by powerful processors that also control the vehicle. These systems, as well as the brakes and steering, must be designed with redundancy in order to still have a backup system in the event of a failure and to be able to safely steer the vehicle and bring it to a stop.

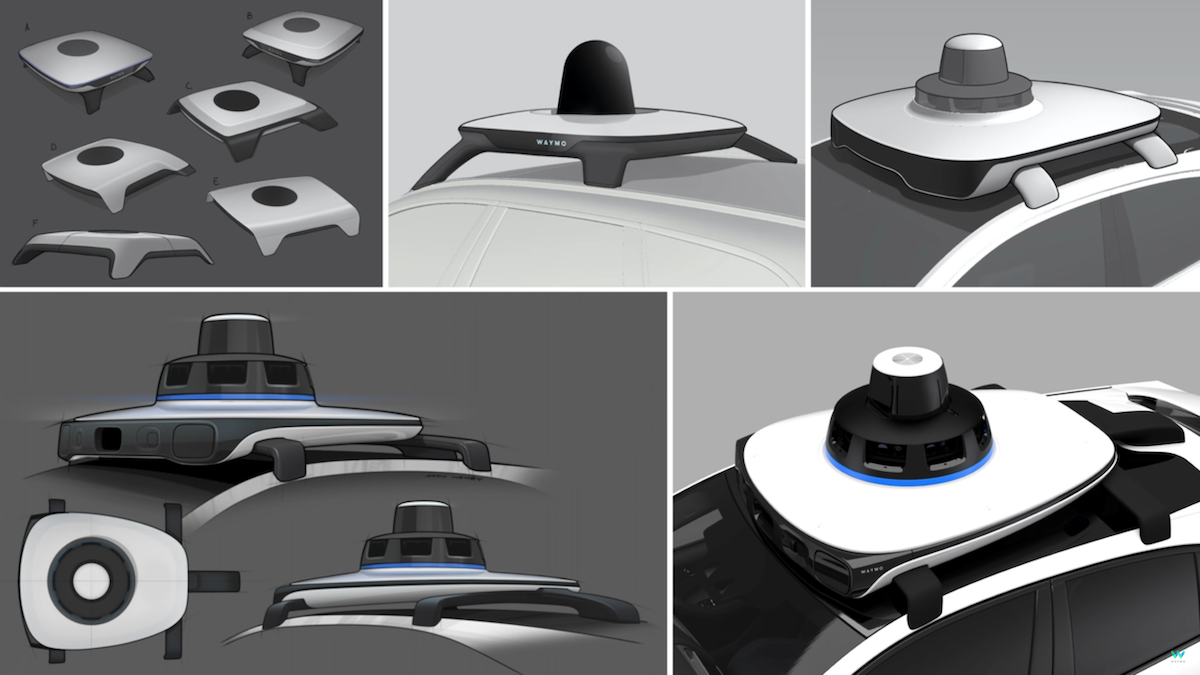

While the Waymo Driver – Waymo’s name for its self-driving technology package – still has 29 cameras, 5 lidars and 6 radars in the fifth generation (as seen on the current Jaguar iPace), there are only 13 cameras, 4 lidars and 6 radars in the sixth generation. This reduces costs and maintenance effort. However, these sensor superstructures are still quite bulky and make the car unsightly for car enthusiasts.

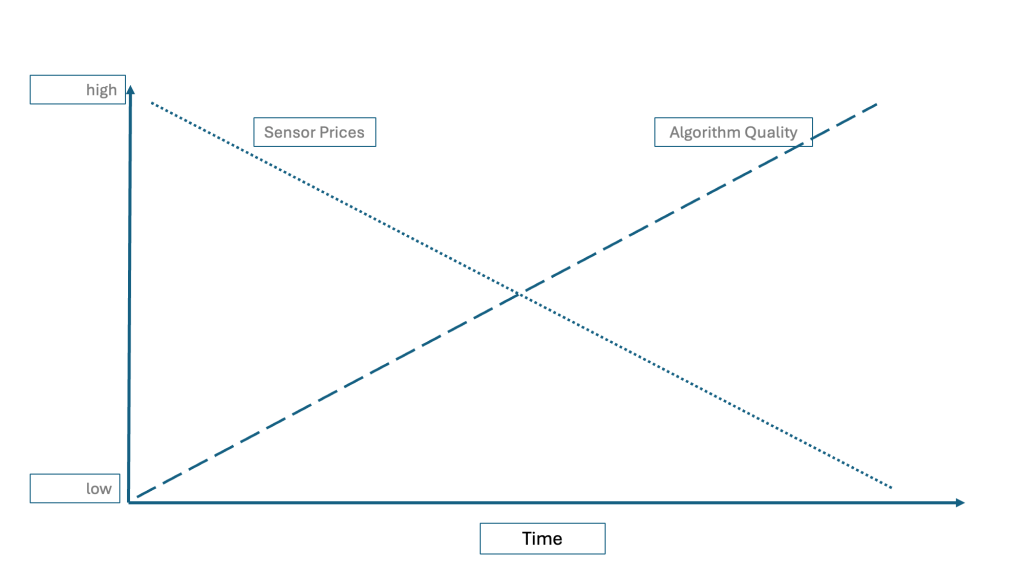

It is currently assumed that a vehicle like the Jaguar iPace with the sensors on board still costs around $200,000. That is quite expensive for a robotaxi and unaffordable for a private vehicle. But as always with electronics, prices are falling rapidly. Whereas just a few years ago a single lidar (as shown in the following video) easily cost $75,000, prices today are well under a tenth of that.

Tesla Sensor Approach

Tesla and a few other manufacturers (such as AutoX originally) pursue a cameras-only (or, like AutoX, cameras-first) approach. The cameras-only approach relies solely on cameras that capture the surroundings in a 360-degree view. In the case of Tesla, there are 8 cameras: 3 at the front, 1 each on the left and right behind the front tire looking backwards, 1 each on the left and right B-pillar looking forwards, and a backup camera with a wide-angle view. A further, ninth camera is located inside the car behind the rear-view mirror and monitors the driver and interior.

Tesla installed radars in its vehicles until around 2020 and then stopped doing so until recently. Allegedly, radars are now being installed again, but according to Tesla, they will not be used for autonomous driving. Tesla’s sensors are seamlessly integrated into the car and are therefore almost invisible. In any case, unlike Waymo’s, they have little effect on the shape of the vehicle.

Teslas are also equipped with two powerful GPUs manufactured by Tesla, which process the data and control the vehicle.

It is currently assumed that the cost of Tesla’s hardware kit will be in the low four-digit dollar range.

2) Advantages and Challenges

Each of these approaches has advantages, but also faces challenges.

Waymo Approach

Waymo’s approach first requires some investment in hardware. Vehicles have to be purchased, equipped with the sensors and then put on the road. Then many kilometers, preferably millions, have to be driven with safety drivers in different geographies and under different traffic and weather conditions.

All the sensors generate terabytes of data per hour, which has to be stored on the vehicle, downloaded from it, and then processed in the ML systems and in the simulator.

Depending on the sensor, this data is very precise. It can capture the position of kerbs or road markings down to the millimeter. Waymo then uses this data to create high-precision navigation maps that are very different from those intended for humans. By combining GPS, other digital maps, text recognition on buildings or signs and even the recognition of typical local landmarks (McDonald’s sign, clock tower…), a vehicle can determine its exact position, even if one source (such as the GPS signal in cities with high-rise buildings) provides only imprecise information or none at all.
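How several imperfect position sources can still add up to one good fix can be sketched with inverse-variance weighting, a standard textbook fusion technique. This is only a minimal illustration and not Waymo’s actual localization method; the function name `fuse_position`, the one-dimensional simplification and all numbers are assumptions made for the example.

```python
from typing import List, Optional, Tuple

# (position in meters along one axis, standard deviation in meters)
Estimate = Tuple[float, float]


def fuse_position(estimates: List[Optional[Estimate]]) -> Estimate:
    """Fuse 1-D position estimates by inverse-variance weighting.

    A source that delivers nothing is passed as None and simply skipped.
    """
    available = [e for e in estimates if e is not None]
    if not available:
        raise ValueError("no position source available")
    # Precise sources (small standard deviation) get large weights.
    weights = [1.0 / (std ** 2) for _, std in available]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, available)) / total
    fused_std = (1.0 / total) ** 0.5
    return fused, fused_std


# Hypothetical readings, invented for this example.
gps_between_high_rises = (105.0, 10.0)  # imprecise GPS fix
map_match = (100.2, 0.1)                # match against the high-precision map
landmark = (100.0, 0.5)                 # recognized local landmark

with_gps = fuse_position([gps_between_high_rises, map_match, landmark])
without_gps = fuse_position([None, map_match, landmark])
print(with_gps, without_gps)
```

In this sketch the imprecise GPS reading barely moves the result, and dropping it entirely changes the fused position by well under a centimeter.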

These high-precision navigation maps must be created before a robotaxi service starts operating, by means of so-called “mapping runs”. This involves sending the robotaxis out on the road with safety drivers and driving along all the roads for several weeks to collect data. This data is then compiled into navigation maps and uploaded to all vehicles.

With the millions of kilometers that vehicles cover in this way, many edge cases are also recorded. These are situations that occur only rarely and that the vehicles encounter only gradually. I also refer to them as the “short nose”. One famous example is the elderly woman in an electric wheelchair who drove around in the middle of the road trying to scare away a duck with a broomstick.

Here are dozens more examples of such edge cases, which vehicles only encounter after tens of millions of kilometers of driving in real traffic and which must be handled safely by the autonomous car.

Thanks to the different sensor data, the vehicle obtains a more precise picture of its surroundings and of other road users. Each type of sensor behaves differently. Where a camera cannot see through fog or a sandstorm, radar and, to a lesser degree, lidar are far less affected. Conversely, these two provide fewer pixels than cameras, which can have a higher resolution. Cameras also provide color information.

However, more sensor modalities (cameras AND lidar AND radar) do not automatically make everything safer. First of all, there is a real risk of building in more errors because the software becomes more complex. With all these sensor modalities combined, the question always arises as to which sensor is right when the sensor types report different things. Camera says yes, lidar says no. Who is right? Because the number of combinations grows exponentially, it will never be possible to test all cases.
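The growth in combinations can be made concrete with a small enumeration. The sketch below is a toy illustration only: it looks at a single object, uses the three modalities named in the text, and assumes a hypothetical set of possible answers per sensor. Real stacks deal with many objects, continuous measurements and timing, which makes the space far larger.

```python
from itertools import product

MODALITIES = ("camera", "lidar", "radar")


def joint_reports(modalities, states):
    """Every combination of per-sensor reports for a single object."""
    return list(product(states, repeat=len(modalities)))


def conflicting(reports):
    """Combinations in which at least two sensors disagree."""
    return [report for report in reports if len(set(report)) > 1]


# Hypothetical answer sets: a plain yes/no, then yes/no plus "unsure".
for states in (("yes", "no"), ("yes", "no", "unsure")):
    reports = joint_reports(MODALITIES, states)
    print(len(states), "states:", len(reports), "combinations,",
          len(conflicting(reports)), "with a conflict")
```

With three sensors and a plain yes/no answer there are already 8 combinations, 6 of which contain a disagreement. Adding a third possible answer raises this to 27 combinations and 24 conflicts, and every conflict needs a decision rule that has to be written and tested.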

The Waymo approach is therefore a slow, expensive and complex one that proceeds region by region. If all goes well, however, it is safer and probably the best approach in the short term. Scaling it up still requires a lot of money and effort, despite ever-improving tools and experience.

Tesla Approach

Tesla had to make a decision that was different from Waymo’s. The company decided to install the Tesla hardware kit, consisting of 8 cameras and two GPUs, in all vehicles and deliver it to customers. Thanks to over-the-air (OTA) updates, Tesla can not only upload software and data to customer vehicles, but also download telemetry and other driving data (such as video) from them. Tesla therefore has access to billions of kilometers of data from the more than 8 million vehicles sold so far that are equipped with the hardware kit and in customer hands. And this is not just video data, but also telemetry data.

And here we come to an important point: people don’t just drive with their eyes (= camera), they drive with their whole body and therefore receive further information. Noise, vibrations, acceleration. Modern cars today also record all this data, such as lateral acceleration to trigger airbags, etc. Tesla’s approach therefore encompasses more than just camera data.

Tesla only began to receive more and more data from customer vehicles once enough vehicles with the hardware kit had been sold. Beyond a tipping point, however, Tesla has been receiving vast amounts of driving data. Admittedly, this data is of lower quality than that available to Waymo. The resolution and frame rates of the videos are lower, and telemetry data does not always fill this gap.

What Tesla has lost in data quality here has to be made up for by algorithms. For example, where the size of an object (e.g. truck) can be captured well thanks to lidar, small pixel errors with the cameras result in incorrect sizes, as Scale.AI has shown.
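Why a few pixels matter can be sketched with the basic pinhole camera model, in which an object’s real width equals its width in pixels times its distance, divided by the focal length in pixels. This is not the Scale.AI analysis mentioned above, only a simplified illustration; the focal length, the truck width and the 2-pixel error are hypothetical values, and the distance is assumed to be known exactly, which a camera-only system would also have to estimate.

```python
def object_width_m(pixel_width: float, distance_m: float,
                   focal_length_px: float) -> float:
    """Pinhole model: real width = pixel width * distance / focal length."""
    return pixel_width * distance_m / focal_length_px


def width_error_m(pixel_error: float, distance_m: float,
                  focal_length_px: float) -> float:
    """Width error caused by mis-measuring the object by `pixel_error` pixels."""
    return pixel_error * distance_m / focal_length_px


FOCAL_LENGTH_PX = 1000.0  # hypothetical camera
TRUCK_WIDTH_M = 2.5       # hypothetical truck

for distance_m in (20.0, 50.0, 100.0):
    pixels = TRUCK_WIDTH_M * FOCAL_LENGTH_PX / distance_m
    error = width_error_m(2.0, distance_m, FOCAL_LENGTH_PX)
    print(f"{distance_m:5.0f} m: truck spans {pixels:6.1f} px, "
          f"a 2 px error shifts the width by {error:.2f} m")
```

The same pixel error grows linearly with distance, so size estimates become less reliable exactly where early detection matters most. A lidar return, by contrast, measures the distance directly.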

However, past experience has shown that algorithms are constantly improving and can ultimately overcome all disadvantages. We saw this with video compression algorithms, for example. Over data lines with unchanged transmission rates, we can send more video than ever before thanks to clever compression algorithms.

What Tesla seems to be struggling with today, as is currently evident in Austin, is camera glare caused by backlighting (sun, oncoming traffic). However, this can also be solved algorithmically by adjusting the camera settings. Rain should also be solvable: on the one hand by adapting the use of the windshield wipers, and on the other hand with Tesla’s own ML system called DeepRain.

Tesla, unlike Waymo, does not create high-precision navigation maps in advance, but builds them individually in each car while driving. The assumption here is that the environment changes too quickly due to construction sites, barriers, and seasonal conditions such as leaves that suddenly lie on the roads in the fall and obscure lane markings. However, such an approach requires a lot of reliable computing power, and Tesla is still struggling with this.

I’d like to reiterate the bet Tesla has made here and how it differs from traditional manufacturers’ approaches. Tesla made the decision years ago to install a hardware kit costing several thousand dollars in all vehicles, even though the software for its actual purpose, autonomous driving, is not even ready yet. Nobody knows when it will be ready and when customers will be able to use this function. Try persuading the management levels at traditional manufacturers to take this approach and you’ll be laughed out of the door or out of a job.

Human drivers already manage to drive in fog. Even if we can’t see very far, in most cases we do one thing: we adjust our speed. We drive more carefully. So why shouldn’t this method, which works for humans, also work for the Tesla approach?
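The “adjust the speed” rule can be written down as simple kinematics: drive only so fast that the reaction distance plus the braking distance fits within the distance you can see. The sketch below solves v·t + v²/(2a) = d for the speed v. The reaction time and deceleration are hypothetical placeholder values, not figures from Tesla or Waymo, and a real planner would consider far more than this.

```python
import math


def stopping_distance_m(speed_mps: float, reaction_time_s: float = 1.5,
                        deceleration_mps2: float = 6.0) -> float:
    """Distance covered while reacting plus distance needed to brake to zero."""
    return (speed_mps * reaction_time_s
            + speed_mps ** 2 / (2.0 * deceleration_mps2))


def max_safe_speed_mps(visibility_m: float, reaction_time_s: float = 1.5,
                       deceleration_mps2: float = 6.0) -> float:
    """Largest speed whose stopping distance still fits into the visible range.

    Positive root of v*t + v^2 / (2a) = d.
    """
    at = deceleration_mps2 * reaction_time_s
    return -at + math.sqrt(at * at + 2.0 * deceleration_mps2 * visibility_m)


# Hypothetical visibility ranges, from dense fog to a clear day.
for visibility_m in (30.0, 50.0, 100.0, 200.0):
    speed = max_safe_speed_mps(visibility_m)
    print(f"visibility {visibility_m:5.0f} m -> at most {speed * 3.6:5.1f} km/h")
```

The shorter the visible range, whether for a human eye or a camera, the lower the speed at which the vehicle can still stop in time.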

3) Development Approach

The third point I would like to raise is one that has not been recognized in the whole debate. It concerns the geographical scope of the two approaches.

Waymo: Bringing the Pot to the Boil

Waymo is taking on one city and one region at a time to offer its robotaxi service. These areas are clearly defined regionally (geofenced) and are gradually being expanded.

Waymo currently has everything under control, as the robotaxi fleet is operated either as Waymo (SF, Bay Area, LA, Austin, Phoenix) or under the label of a partner such as Uber (Atlanta).

Waymo boils water one pot after another.

Tesla: Boil the Ocean

Tesla not only collects data from customer vehicles that are on the road in clearly defined regions, but wherever they are on the road. Today, this means mostly throughout North America, Europe, Asia and Australia. Tesla must therefore try to take into account all the differences that exist across all the countries covered. This is a much more complex task: city and countryside, left-hand and right-hand traffic, widely differing and sometimes extreme weather conditions, and the behavior of people in different cultures. A Herculean task, as you can imagine.

This is why progress in Tesla’s approach seems so slow. But the steady drip wears away the stone, and at some point a state will be reached where, with the flick of a switch, all Teslas driving anywhere in the world will be able to drive autonomously.

Tesla is trying to boil the whole ocean at once.

TLDR

If you account for factors such as

- price of Lidar (and any additional sensor modality such as thermal/infrared cameras);

- algorithms getting better for cameras.

and more sensor modalities meaning

- more software code increasing complexity of software stack;

- adding complexity also means introducing more software bugs;

- dealing with conflicting signals (camera says yes, Lidar says no – whom do you trust) – you need software routines to make the corresponding decisions (again with introducing more software bugs);

- higher costs of maintenance (calibration, cleaning, error analysis…);

- different behaviors in various ranges of temperature, light conditions, speeds;

- increased power requirements from the car;

- increased data flow, requiring processing and storage capabilities.

you quickly see that the claim that multiple sensor modalities are automatically the better choice is no longer such a clear-cut picture.

Summary

All of the above leads us to the following summary:

- Both approaches will be successful;

- The Waymo approach is much more expensive today than Tesla’s approach. The former is only affordable for robotaxi fleets, the latter for all cars (private cars);

- In the short term, the Waymo approach is safer and a geofenced region can be put into operation more quickly. In the medium and long term, the Tesla approach will also be safe enough thanks to ever-advancing algorithms;

- Waymo is targeting robotaxi fleets, Tesla is targeting both private cars and robotaxi fleets.

Who can capture more market share depends above all on who wins the race to answer the following question: Will a Waymo sensor stack become cheaper more quickly and therefore ready for use in many vehicles, or will the algorithms in the Tesla approach become better and safer more quickly?

One more thing

Kyle Vogt, founder and former CEO of Cruise Automation, talks about these differences:

This article was also published in German.
